TensorBoard Analysis: Thumb rules that work for me

Thumb rule #1:

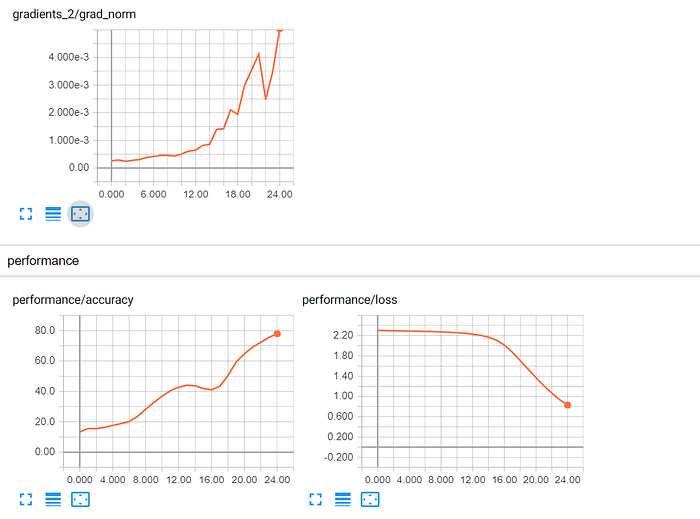

Suppose the accuracy is going up, but very slowly, while the gradient updates are increasing over time. This is odd behavior: if you were approaching convergence, you would expect the gradients to diminish (approach zero), not grow. But because the accuracy is still going up, you’re on the right path. You probably need a higher learning rate.
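To make the rule concrete, here is a minimal sketch that checks two logged series for this pattern. It assumes you have exported per-epoch accuracy and gradient magnitudes as plain Python lists. The function names and the `slow_gain` threshold are hypothetical and only serve the illustration; they are not part of TensorBoard.

```python
def slope(values):
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


def rule_of_thumb_1(accuracy, grad_norm, slow_gain=0.01):
    """Return a hint string based on the trends of two logged scalars.

    accuracy  -- per-epoch accuracy values in [0, 1]
    grad_norm -- per-epoch gradient (update) magnitudes
    slow_gain -- assumed threshold: an accuracy gain per epoch below
                 this value counts as "slow"
    """
    acc_slope = slope(accuracy)
    grad_slope = slope(grad_norm)
    if 0 < acc_slope < slow_gain and grad_slope > 0:
        return "accuracy rising slowly, gradients growing: try a higher learning rate"
    return "pattern from rule #1 not detected"


# Made-up numbers: accuracy creeps up while the gradients keep growing.
print(rule_of_thumb_1([0.50, 0.502, 0.504, 0.506], [1.0, 1.2, 1.4, 1.6]))
```

The threshold is a judgment call; what counts as "slow" depends on the task, so treat the output as a prompt to look at the curves rather than as a verdict.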

Thumb rule #2:

Visualizing network weights is important, because if the weights are wildly jumping here and there during learning, it indicates something is wrong with the weight initialization or the learning rate.

Histograms for weights and biases:

The histograms are written with the function tf.summary.histogram(name, data); note that the name comes first. A histogram summarizes a collection of values by how frequently each value (or range of values) occurs in it. In TensorBoard, histograms are used to visualize how the weights change over time. This matters because it can hint that the weight initialization or the learning rate is wrong.

The last layer histogram for different learning rates

A histogram plot has three dimensions. The depth (y-dimension) is the epoch: the deeper (and darker) a slice is, the older its values are. The x-dimension shows the values themselves, and the z-dimension shows the density of values at each point along x.
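The three dimensions are easier to see with numbers. The sketch below builds one histogram per epoch from made-up weights, in plain Python. It only illustrates the idea; TensorBoard's own bucketing differs in detail, and the `histogram` helper and the data are assumptions for this example.

```python
def histogram(values, low, high, num_buckets):
    """Count how many values fall into each of num_buckets equal-width
    buckets spanning [low, high]. Values outside the range are ignored."""
    counts = [0] * num_buckets
    width = (high - low) / num_buckets
    for v in values:
        if low <= v <= high:
            # A value exactly at the top edge goes into the last bucket.
            index = min(int((v - low) / width), num_buckets - 1)
            counts[index] += 1
    return counts


# Made-up weights of one layer, snapshotted at three epochs.
weights_per_epoch = [
    [-0.08, -0.04, 0.00, 0.03, 0.07],   # epoch 0
    [-0.25, -0.12, 0.00, 0.15, 0.22],   # epoch 1
    [-0.45, -0.20, 0.00, 0.20, 0.45],   # epoch 2
]

# One row per epoch (y), one column per bucket (x), cell value = count (z).
stacked = [histogram(w, -0.5, 0.5, 5) for w in weights_per_epoch]
for epoch, counts in enumerate(stacked):
    print(f"epoch {epoch}: {counts}")
```

Reading the rows top to bottom is the same as reading the TensorBoard plot from back to front: here the counts start concentrated in the middle bucket and spread outwards as the epochs go by.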

Let’s learn by example:

It appears that the network hasn’t learned anything in layers one to three. The last layer does change, which means that either there is something wrong with the gradients (if you’re tampering with them manually), you’re constraining learning to the last layer by optimizing only its weights, or the last layer really ‘eats up’ all the error. It could also be that only the biases are learned. The network does appear to learn something, but it might not be using its full potential. More context would be needed here, but playing around with the learning rate (e.g. using a smaller one) might be worth a shot.
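If you would rather confirm this with numbers than by eyeballing histograms, you can compare weight snapshots from the start and the end of training. A minimal sketch, assuming you can dump each layer's weights as a flat list; the layer names, the values, and the `tol` tolerance are all made up for illustration.

```python
def max_abs_change(before, after):
    """Largest absolute difference between two equally long weight lists."""
    return max(abs(a - b) for a, b in zip(after, before))


def frozen_layers(snapshot_start, snapshot_end, tol=1e-6):
    """Names of layers whose weights moved by at most tol between snapshots."""
    return [
        name
        for name in snapshot_start
        if max_abs_change(snapshot_start[name], snapshot_end[name]) <= tol
    ]


# Made-up flattened weights, before and after some training.
start = {
    "layer1/weights": [0.10, -0.05, 0.02],
    "layer2/weights": [0.07, 0.01, -0.12],
    "layer3/weights": [-0.03, 0.09, 0.04],
    "layer4/weights": [0.05, -0.08, 0.11],
}
end = {
    "layer1/weights": [0.10, -0.05, 0.02],
    "layer2/weights": [0.07, 0.01, -0.12],
    "layer3/weights": [-0.03, 0.09, 0.04],
    "layer4/weights": [0.41, -0.37, 0.29],
}

# Layers one to three did not move; only the last layer changed.
print(frozen_layers(start, end))
```

Running the same check on the biases would tell you whether the "only biases are learned" explanation applies.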

Now for layer1/weights, the plateau means that:

- most of the weights are in the range of -0.15 to 0.15

- it is (mostly) equally likely for a weight to have any of these values, i.e. they are (almost) uniformly distributed

Said differently, almost the same number of weights have the values -0.15, 0.0, 0.15 and everything in between. There are some weights having slightly smaller or higher values. So in short, this simply looks like the weights have been initialized using a uniform distribution with zero mean and value range -0.15..0.15 ... give or take. If you do indeed use uniform initialization, then this is typical when the network has not been trained yet.
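You can reproduce this plateau shape without a network. The sketch below draws weights from a uniform distribution on -0.15..0.15 and counts them per bucket. The sample size, bucket count, and seed are arbitrary choices for the illustration, and `uniform_init` and `bucket_counts` are hypothetical helpers, not framework functions.

```python
import random


def uniform_init(n, limit, seed=0):
    """Draw n weights uniformly from [-limit, limit] with a fixed seed."""
    rng = random.Random(seed)
    return [rng.uniform(-limit, limit) for _ in range(n)]


def bucket_counts(values, low, high, num_buckets):
    """Count values per equal-width bucket over [low, high]."""
    counts = [0] * num_buckets
    width = (high - low) / num_buckets
    for v in values:
        if low <= v <= high:
            # A value exactly at the top edge goes into the last bucket.
            counts[min(int((v - low) / width), num_buckets - 1)] += 1
    return counts


weights = uniform_init(10_000, 0.15)
counts = bucket_counts(weights, -0.15, 0.15, 6)

# For a flat histogram every bucket holds roughly the same share.
expected = len(weights) / len(counts)
flatness = max(abs(c - expected) / expected for c in counts)
print(counts)
print(f"largest relative deviation from a flat histogram: {flatness:.3f}")
```

A small deviation means the bucket counts are nearly equal, which is the flat top you see in the layer1/weights histogram before training has changed anything.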

NOTE: I will add to this story soon

References:

[1] https://stackoverflow.com/questions/42315202/understanding-tensorboard-weight-histograms